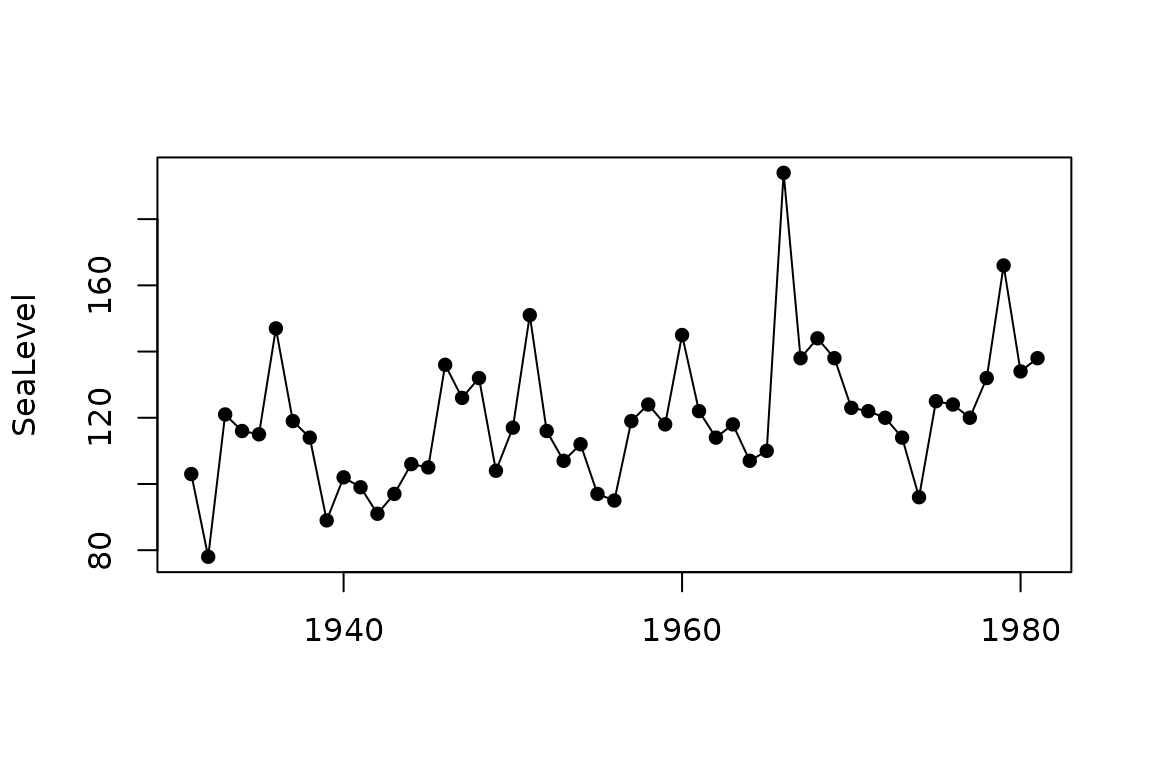

Venice example and data

This example was studied by Coles and Fawcett who both fitted time varying GEV models with linear and quadratic trend by using the ismev package. We reproduce here their results by using NSGEV and also extRemes.

Although the data contains the -largest observations, we use only the annual maxima here; These are found in column 2 of the matrix object.

data(venice, package = "ismev")

df <- data.frame(Year = venice[ , 1],

date = as.Date(sprintf( "%4d-01-01", venice[ , 1])),

SeaLevel = venice[ , 2])

df <- within(df, date <- as.Date(sprintf("%4d-01-01", Year)))

plot(SeaLevel ~ date, data = df, type = "o", pch = 16, xlab = "")

Fitting stationary GEV models

We begin by fitting a stationary GEV model.

estim <- "optim"

fitTVGEV0 <- TVGEV(data = df,

response = "SeaLevel", date = "date",

estim = estim,

loc = ~ 1)

fitismev0 <- gev.fit(xdat = df$SeaLev)

fitextRemes0 <- extRemes::fevd(x = df$SeaLev, type = "GEV")The estimated coefficients and maximised log-likelihoods are as follows.

## mu_0 sigma_0 xi_0

## NSGEV 111.0982 17.17421 -0.07672147

## ismev 111.0993 17.17549 -0.07673265

## extRemes 111.0973 17.17910 -0.07678211## NSGEV ismev extRemes

## -222.7145 -222.7145 -222.7145Linear trend

We now introduce a linear trend. We first create a dataframe to be used with the functions from extRemes and ismev, with a linear and variable a quadratic trend variable as well.

dfismev <- within(df, {t1num <- Year - 1930; t2num <- (Year - 1930)^2 })

fitTVGEV1 <- TVGEV(data = df,

response = "SeaLevel", date = "date",

design = polynomX(date = date,

degree = 2, origin = "1930-01-01"),

estim = estim,

trace = 2,

loc = ~ 1 + t1)

fitismev1 <- gev.fit(xdat = df$SeaLev,

ydat = as.matrix(dfismev[ , "t1num"]),

mul = 1)

fitextRemes1 <- extRemes::fevd(x = dfismev$SeaLev,

data = dfismev,

type = "GEV",

location.fun = ~t1num)## mu_0 mu_t1 sigma_0 xi_0

## NSGEV 96.97787 0.5645199 14.58559 -0.02750053

## ismev 96.98579 0.5641427 14.58435 -0.02731421

## extRemes 96.96317 0.5648220 14.58120 -0.02731842## NSGEV ismev extRemes

## -216.0625 -216.0626 -216.0626We can perform a likelihood-ratio test as in the references.

anova(fitTVGEV0, fitTVGEV1)## Analysis of Deviance Table

##

## df deviance W Pr(>W)

## fitTVGEV0 3 445.43

## fitTVGEV1 4 432.13 13.304 0.0002648 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Quadratic trend

We now add a quadratic term in the trend.

fitTVGEV2 <- TVGEV(data = df,

response = "SeaLevel", date = "date",

design = polynomX(date = date,

degree = 2, origin = "1930-01-01"),

estim = estim,

trace = 2,

loc = ~ 1 + t1 + t2)

fitismev2 <- gev.fit(xdat = dfismev$SeaLev,

ydat = as.matrix(dfismev[ , c("t1num", "t2num")]),

mul = c(1, 2))

fitextRemes2 <- extRemes::fevd(x = dfismev$SeaLev,

data = dfismev,

type = "GEV",

location.fun = ~t1num + t2num) The estimated coefficients and maximised log-likelihoods are as follows.

## mu_0 mu_t1 mu_t2 sigma_0 xi_0

## NSGEV 98.23632 0.4398647 0.002236958 14.62472 -0.02896788

## ismev 96.38501 0.6325000 -0.001340599 14.56492 -0.02547159

## extRemes 96.37645 0.6330981 -0.001347965 14.56132 -0.02545380## NSGEV ismev extRemes

## -216.1088 -216.0555 -216.0555So, compared to the two other packages, NSGEV::TVGEV

returns different estimated coefficients and a smaller maximised

log-likelihood, indicating a problem in the maximisation. Since the

scaling of the trend variable is often a concern, we try a change in the

time origin.

fitTVGEV2b <- TVGEV(data = df,

response = "SeaLevel", date = "date",

design = polynomX(date = date,

degree = 2, origin = "1950-01-01"),

estim = estim,

trace = 2,

loc = ~ 1 + t1 + t2)## o Analysis of formulas

## - All Variables: 't1', 't2'

## - Design Variables: 't1', 't2'

## - TS Covariates: ''

## o Mininimisation of the negative log-likelihood

## using `stats::optim`.

## Nelder-Mead direct search function minimizer

## function value for initial parameters = 216.189225

## Scaled convergence tolerance is 3.22147e-06

## Stepsize computed as 10.733734

## BUILD 6 99999999999999996863366107917975552.000000 216.189225

## LO-REDUCTION 8 99999999999999996863366107917975552.000000 216.189225

## SHRINK 15 99999999999999996863366107917975552.000000 216.189225

## SHRINK 22 99999999999999996863366107917975552.000000 216.189225

## SHRINK 29 99999999999999996863366107917975552.000000 216.189225

## HI-REDUCTION 31 395.055639 216.189225

## HI-REDUCTION 33 345.170389 216.189225

## HI-REDUCTION 35 326.717234 216.189225

## LO-REDUCTION 37 293.660970 216.189225

## HI-REDUCTION 39 260.206192 216.189225

## HI-REDUCTION 41 252.579402 216.189225

## HI-REDUCTION 43 245.565293 216.189225

## HI-REDUCTION 45 242.037453 216.189225

## LO-REDUCTION 47 237.911955 216.189225

## HI-REDUCTION 49 232.543335 216.189225

## HI-REDUCTION 51 226.024758 216.189225

## HI-REDUCTION 53 222.924849 216.189225

## LO-REDUCTION 55 220.136778 216.189225

## HI-REDUCTION 57 217.498223 216.189225

## LO-REDUCTION 59 217.234803 216.181666

## HI-REDUCTION 61 217.036130 216.181666

## HI-REDUCTION 63 216.637215 216.181666

## LO-REDUCTION 65 216.438856 216.145144

## HI-REDUCTION 67 216.368823 216.145144

## LO-REDUCTION 69 216.290062 216.126208

## HI-REDUCTION 71 216.214517 216.126208

## REFLECTION 73 216.189225 216.090001

## REFLECTION 75 216.181666 216.086023

## HI-REDUCTION 77 216.151985 216.086023

## LO-REDUCTION 79 216.145144 216.077084

## LO-REDUCTION 81 216.126208 216.077084

## HI-REDUCTION 83 216.096534 216.077084

## LO-REDUCTION 85 216.090001 216.068836

## HI-REDUCTION 87 216.086023 216.068836

## HI-REDUCTION 89 216.082751 216.068836

## HI-REDUCTION 91 216.077997 216.068836

## HI-REDUCTION 93 216.077084 216.068836

## REFLECTION 95 216.071708 216.062770

## LO-REDUCTION 97 216.071403 216.062770

## LO-REDUCTION 99 216.070698 216.062770

## REFLECTION 101 216.069431 216.062323

## LO-REDUCTION 103 216.068836 216.062323

## LO-REDUCTION 105 216.066577 216.062082

## REFLECTION 107 216.065654 216.061685

## LO-REDUCTION 109 216.064333 216.061073

## REFLECTION 111 216.062770 216.060887

## REFLECTION 113 216.062323 216.059933

## LO-REDUCTION 115 216.062082 216.059933

## LO-REDUCTION 117 216.061685 216.059933

## HI-REDUCTION 119 216.061073 216.059933

## REFLECTION 121 216.060887 216.059139

## LO-REDUCTION 123 216.060257 216.059139

## REFLECTION 125 216.060079 216.059036

## LO-REDUCTION 127 216.060019 216.058951

## HI-REDUCTION 129 216.059933 216.058951

## LO-REDUCTION 131 216.059423 216.058910

## HI-REDUCTION 133 216.059265 216.058910

## REFLECTION 135 216.059139 216.058821

## REFLECTION 137 216.059036 216.058710

## LO-REDUCTION 139 216.059026 216.058710

## REFLECTION 141 216.058951 216.058606

## REFLECTION 143 216.058910 216.058555

## LO-REDUCTION 145 216.058821 216.058555

## LO-REDUCTION 147 216.058789 216.058555

## REFLECTION 149 216.058710 216.058408

## LO-REDUCTION 151 216.058606 216.058408

## LO-REDUCTION 153 216.058568 216.058408

## LO-REDUCTION 155 216.058559 216.058408

## REFLECTION 157 216.058555 216.058372

## LO-REDUCTION 159 216.058456 216.058364

## EXTENSION 161 216.058435 216.058311

## REFLECTION 163 216.058432 216.058308

## EXTENSION 165 216.058408 216.058245

## REFLECTION 167 216.058372 216.058212

## LO-REDUCTION 169 216.058364 216.058212

## REFLECTION 171 216.058311 216.058208

## REFLECTION 173 216.058308 216.058159

## LO-REDUCTION 175 216.058245 216.058150

## LO-REDUCTION 177 216.058237 216.058150

## LO-REDUCTION 179 216.058212 216.058150

## LO-REDUCTION 181 216.058208 216.058148

## REFLECTION 183 216.058174 216.058140

## HI-REDUCTION 185 216.058159 216.058140

## LO-REDUCTION 187 216.058150 216.058135

## HI-REDUCTION 189 216.058150 216.058135

## LO-REDUCTION 191 216.058148 216.058130

## LO-REDUCTION 193 216.058143 216.058130

## REFLECTION 195 216.058140 216.058122

## HI-REDUCTION 197 216.058135 216.058122

## REFLECTION 199 216.058135 216.058119

## HI-REDUCTION 201 216.058130 216.058119

## HI-REDUCTION 203 216.058130 216.058119

## LO-REDUCTION 205 216.058126 216.058119

## REFLECTION 207 216.058125 216.058118

## LO-REDUCTION 209 216.058124 216.058118

## LO-REDUCTION 211 216.058122 216.058118

## EXTENSION 213 216.058120 216.058113

## EXTENSION 215 216.058119 216.058111

## LO-REDUCTION 217 216.058119 216.058111

## LO-REDUCTION 219 216.058119 216.058111

## LO-REDUCTION 221 216.058118 216.058111

## EXTENSION 223 216.058113 216.058102

## LO-REDUCTION 225 216.058113 216.058102

## LO-REDUCTION 227 216.058112 216.058102

## EXTENSION 229 216.058112 216.058100

## REFLECTION 231 216.058111 216.058099

## EXTENSION 233 216.058107 216.058085

## LO-REDUCTION 235 216.058102 216.058085

## LO-REDUCTION 237 216.058102 216.058085

## LO-REDUCTION 239 216.058100 216.058085

## LO-REDUCTION 241 216.058099 216.058085

## LO-REDUCTION 243 216.058094 216.058085

## EXTENSION 245 216.058093 216.058075

## LO-REDUCTION 247 216.058092 216.058075

## EXTENSION 249 216.058087 216.058070

## EXTENSION 251 216.058086 216.058064

## EXTENSION 253 216.058085 216.058053

## EXTENSION 255 216.058076 216.058034

## LO-REDUCTION 257 216.058075 216.058034

## LO-REDUCTION 259 216.058070 216.058034

## EXTENSION 261 216.058064 216.058014

## LO-REDUCTION 263 216.058053 216.058014

## EXTENSION 265 216.058040 216.058001

## REFLECTION 267 216.058036 216.058000

## EXTENSION 269 216.058034 216.057967

## LO-REDUCTION 271 216.058022 216.057967

## LO-REDUCTION 273 216.058014 216.057967

## LO-REDUCTION 275 216.058001 216.057967

## LO-REDUCTION 277 216.058000 216.057967

## LO-REDUCTION 279 216.057991 216.057967

## LO-REDUCTION 281 216.057991 216.057967

## EXTENSION 283 216.057981 216.057950

## LO-REDUCTION 285 216.057980 216.057950

## EXTENSION 287 216.057973 216.057938

## LO-REDUCTION 289 216.057970 216.057938

## EXTENSION 291 216.057967 216.057933

## LO-REDUCTION 293 216.057957 216.057933

## LO-REDUCTION 295 216.057950 216.057933

## LO-REDUCTION 297 216.057949 216.057933

## REFLECTION 299 216.057940 216.057930

## EXTENSION 301 216.057938 216.057925

## HI-REDUCTION 303 216.057937 216.057925

## EXTENSION 305 216.057935 216.057922

## LO-REDUCTION 307 216.057933 216.057922

## EXTENSION 309 216.057931 216.057912

## LO-REDUCTION 311 216.057930 216.057912

## LO-REDUCTION 313 216.057925 216.057912

## EXTENSION 315 216.057925 216.057907

## EXTENSION 317 216.057922 216.057899

## EXTENSION 319 216.057922 216.057881

## LO-REDUCTION 321 216.057916 216.057881

## LO-REDUCTION 323 216.057912 216.057881

## REFLECTION 325 216.057907 216.057879

## EXTENSION 327 216.057899 216.057850

## LO-REDUCTION 329 216.057891 216.057850

## EXTENSION 331 216.057881 216.057818

## LO-REDUCTION 333 216.057881 216.057818

## LO-REDUCTION 335 216.057879 216.057818

## EXTENSION 337 216.057857 216.057764

## LO-REDUCTION 339 216.057850 216.057764

## EXTENSION 341 216.057836 216.057727

## LO-REDUCTION 343 216.057832 216.057727

## EXTENSION 345 216.057818 216.057632

## LO-REDUCTION 347 216.057796 216.057632

## EXTENSION 349 216.057764 216.057543

## LO-REDUCTION 351 216.057741 216.057543

## EXTENSION 353 216.057727 216.057423

## EXTENSION 355 216.057677 216.057326

## EXTENSION 357 216.057632 216.057110

## LO-REDUCTION 359 216.057568 216.057110

## LO-REDUCTION 361 216.057543 216.057110

## EXTENSION 363 216.057423 216.056893

## EXTENSION 365 216.057326 216.056826

## EXTENSION 367 216.057210 216.056575

## LO-REDUCTION 369 216.057114 216.056575

## REFLECTION 371 216.057110 216.056543

## LO-REDUCTION 373 216.056893 216.056543

## LO-REDUCTION 375 216.056826 216.056543

## LO-REDUCTION 377 216.056750 216.056543

## EXTENSION 379 216.056594 216.056419

## LO-REDUCTION 381 216.056575 216.056419

## EXTENSION 383 216.056574 216.056323

## LO-REDUCTION 385 216.056550 216.056323

## EXTENSION 387 216.056543 216.056157

## REFLECTION 389 216.056421 216.056146

## LO-REDUCTION 391 216.056419 216.056146

## EXTENSION 393 216.056325 216.056035

## EXTENSION 395 216.056323 216.055849

## LO-REDUCTION 397 216.056183 216.055849

## EXTENSION 399 216.056157 216.055657

## LO-REDUCTION 401 216.056146 216.055657

## LO-REDUCTION 403 216.056035 216.055657

## LO-REDUCTION 405 216.055964 216.055657

## LO-REDUCTION 407 216.055849 216.055657

## LO-REDUCTION 409 216.055783 216.055657

## REFLECTION 411 216.055770 216.055656

## EXTENSION 413 216.055741 216.055597

## LO-REDUCTION 415 216.055737 216.055597

## REFLECTION 417 216.055689 216.055577

## REFLECTION 419 216.055657 216.055546

## LO-REDUCTION 421 216.055656 216.055546

## LO-REDUCTION 423 216.055609 216.055546

## REFLECTION 425 216.055597 216.055539

## REFLECTION 427 216.055577 216.055499

## HI-REDUCTION 429 216.055553 216.055499

## LO-REDUCTION 431 216.055547 216.055499

## HI-REDUCTION 433 216.055546 216.055499

## LO-REDUCTION 435 216.055539 216.055499

## LO-REDUCTION 437 216.055534 216.055499

## EXTENSION 439 216.055521 216.055469

## LO-REDUCTION 441 216.055520 216.055469

## LO-REDUCTION 443 216.055509 216.055469

## LO-REDUCTION 445 216.055505 216.055469

## EXTENSION 447 216.055499 216.055446

## LO-REDUCTION 449 216.055497 216.055446

## REFLECTION 451 216.055472 216.055440

## LO-REDUCTION 453 216.055470 216.055440

## REFLECTION 455 216.055469 216.055439

## REFLECTION 457 216.055453 216.055435

## REFLECTION 459 216.055449 216.055433

## LO-REDUCTION 461 216.055446 216.055432

## LO-REDUCTION 463 216.055440 216.055432

## HI-REDUCTION 465 216.055439 216.055432

## REFLECTION 467 216.055436 216.055431

## LO-REDUCTION 469 216.055435 216.055431

## LO-REDUCTION 471 216.055434 216.055431

## Exiting from Nelder Mead minimizer

## 473 function evaluations used## mu_0 mu_t1 mu_t2 sigma_0 xi_0

## NSGEV 108.50613 0.5793764 -0.001374096 14.55759 -0.02535797

## ismev 96.38501 0.6325000 -0.001340599 14.56492 -0.02547159

## extRemes 96.37645 0.6330981 -0.001347965 14.56132 -0.02545380

c("NSGEV" = logLik(fitTVGEV2b),

"ismev" = -fitismev2$nllh,

"extRemes" = -fitextRemes2$results$value)## NSGEV ismev extRemes

## -216.0554 -216.0555 -216.0555The log-likelihood maximised with NSGEV::TVGEV is now

identical to that of the other two packages so a simple change of origin

solved the problem. The resulting model in the TVGEV object

is simply a re-parameterisation of the former, and the comparison with

the other packages needs some care because the trends for the location

parameter are no longer identical. The new parameterisation facilitates

the maximisation. To complete the comparison we can use 1950 as an

origin for all models.

dfismevb <- within(df, {t1num <- Year - 1950; t2num <- (Year - 1950)^2 })

fitismev2b <- gev.fit(xdat = dfismevb$SeaLev,

ydat = as.matrix(dfismevb[ , c("t1num", "t2num")]),

mul = c(1, 2))

fitextRemes2b <- extRemes::fevd(x = dfismevb$SeaLev,

data = dfismevb,

type = "GEV",

location.fun = ~t1num + t2num)## mu_0 mu_t1 mu_t2 sigma_0 xi_0

## NSGEV 108.5061 0.5793764 -0.001374096 14.55759 -0.02535797

## ismev 108.5073 0.5798072 -0.001392904 14.55171 -0.02536854

## extRemes 108.5062 0.5791653 -0.001358444 14.56185 -0.02550671

c("NSGEV" = logLik(fitTVGEV2b),

"ismev" = -fitismev2b$nllh,

"extRemes" = -fitextRemes2b$results$value)## NSGEV ismev extRemes

## -216.0554 -216.0555 -216.0555We now can proceed to the likelihood ratio test.

anova(fitTVGEV1, fitTVGEV2b)## Analysis of Deviance Table

##

## df deviance W Pr(>W)

## fitTVGEV1 4 432.13

## fitTVGEV2b 5 432.11 0.014213 0.9051The test tells that adding a quadratic trend term does not improve the model.

Findings

With this example it appears that in NSGEV::TVGEV the

maximisation of log-likelihood can fail to find the best value, still

giving an indication of convergence. Here the problem is due to the

order of magnitude of one covariate, namely the quadratic term in the

polynomial basis. It was fixed by simply changing the origin of

time.

With the current implementation of NSGEV when formulas involving several basis covariates are used, we recommend to fit models with increasing complexity, and check that the log-likelihood increases when adding a new covariate.

Several solutions can be to improve the code of

NSGEV::TVGEV in future versions.

Allow the user to give initial values for the parameters.

Try a “multistart” optimisation with randomly selected initial values.

Scale the design matrix by subtracting its mean to each column, and by dividing each column by its standard deviation. Although this is a quite simple application of the

scalemethod for the"matrix"class, the estimated coefficients should then be translated back in the original scale, which can be quite tedious to implement.